Learning a Depth Covariance Function

CVPR 2023

We propose learning a depth covariance function with applications to geometric vision tasks. Given an RGB image as input, the covariance function can be used flexibly to define priors over depth functions, predictive distributions given observations, and methods for active point selection. We apply these techniques to a selection of downstream tasks: depth completion, bundle adjustment, and monocular dense visual odometry.
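To make "predictive distributions given observations" and "active point selection" concrete, here is a minimal Gaussian-process sketch in Python/NumPy. It assumes a zero-mean depth prior and uses a fixed squared-exponential kernel (`se_kernel`) as a hypothetical stand-in for the learned covariance. The function names, the noise level, and the greedy maximum-variance selection rule are illustrative assumptions, not necessarily what the paper uses.

```python
import numpy as np

def se_kernel(a, b, length_scale=10.0, variance=1.0):
    """Squared-exponential kernel between pixel sets a (N, 2) and b (M, 2).
    Hypothetical stand-in for the learned depth covariance."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    return variance * np.exp(-0.5 * d2 / length_scale**2)

def gp_predict(x_obs, y_obs, x_query, noise_var=1e-4, kernel=se_kernel):
    """Posterior mean and variance of (zero-mean) depth at x_query,
    conditioned on depths y_obs observed at pixels x_obs."""
    # Observation covariance plus a small noise term for numerical stability.
    K_oo = kernel(x_obs, x_obs) + noise_var * np.eye(len(x_obs))
    K_qo = kernel(x_query, x_obs)
    L = np.linalg.cholesky(K_oo)
    # alpha = K_oo^{-1} y via two triangular solves.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    mean = K_qo @ alpha
    # Prior variance minus the variance explained by the observations.
    v = np.linalg.solve(L, K_qo.T)
    var = np.diag(kernel(x_query, x_query)) - (v**2).sum(axis=0)
    return mean, var

def greedy_select(x_cand, k, noise_var=1e-4, kernel=se_kernel):
    """Pick k candidate pixels one at a time, each time taking the pixel
    with the largest posterior variance given the pixels already chosen."""
    chosen = []
    for _ in range(k):
        if chosen:
            # The posterior variance does not depend on observed values, so zeros suffice.
            _, var = gp_predict(x_cand[chosen], np.zeros(len(chosen)), x_cand,
                                noise_var, kernel)
        else:
            var = np.diag(kernel(x_cand, x_cand))
        var = var.copy()              # np.diag returns a read-only view
        var[chosen] = -np.inf         # never pick the same pixel twice
        chosen.append(int(np.argmax(var)))
    return chosen
```

Example: with candidates at `[[0, 0], [5, 0], [10, 0], [15, 0], [20, 0]]`, `greedy_select(x_cand, 3)` first takes pixel 0, then the farthest pixel 4, then the midpoint pixel 2, because each pick lands where the current prediction is least certain.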

While there are many ways to define a covariance function, we demonstrate one such choice. From an RGB image, a CNN predicts a 2D covariance matrix for every pixel, and the depth covariance between two pixels is computed from the product of the two corresponding Gaussian distributions. Parameterizing the covariance in this way promotes local influence: two pixels whose distributions barely overlap are only weakly correlated.
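One way to turn per-pixel 2D covariances into a depth covariance is to take the overlap integral of the two Gaussians, since the integral of N(u; x_i, S_i) N(u; x_j, S_j) over u equals N(x_i; x_j, S_i + S_j), and then normalize it so every pixel has the same prior variance. The sketch below assumes that normalized-overlap form; the paper's exact kernel, scaling, and any extra terms are not reproduced here, and the per-pixel matrices are supplied directly rather than predicted by a CNN. `overlap_kernel` and `signal_var` are hypothetical names.

```python
import numpy as np

def overlap_kernel(x, sigmas, signal_var=1.0):
    """Covariance matrix between N pixels from per-pixel 2D Gaussians.

    x: (N, 2) pixel coordinates.
    sigmas: (N, 2, 2) symmetric positive-definite covariance per pixel
            (assumed given here; the described method predicts them with a CNN).
    Entry (i, j) is the overlap of N(x_i, S_i) and N(x_j, S_j), normalized
    so that every diagonal entry equals signal_var.
    """
    diff = x[:, None, :] - x[None, :, :]                      # (N, N, 2)
    S = sigmas[:, None] + sigmas[None, :]                     # (N, N, 2, 2): S_i + S_j
    S_inv = np.linalg.inv(S)                                  # batched 2x2 inverses
    maha = np.einsum("ijk,ijkl,ijl->ij", diff, S_inv, diff)   # d^T (S_i + S_j)^{-1} d
    det_i = np.linalg.det(sigmas)                             # (N,)
    det_S = np.linalg.det(S)                                  # (N, N)
    # 2D normalization: 2 |S_i|^{1/4} |S_j|^{1/4} / |S_i + S_j|^{1/2}, equal to 1 when i == j.
    norm = 2.0 * (det_i[:, None] * det_i[None, :]) ** 0.25 / np.sqrt(det_S)
    return signal_var * norm * np.exp(-0.5 * maha)
```

For two pixels with isotropic covariances s^2 I at distance d, this reduces to exp(-d^2 / (4 s^2)): pixels with small predicted covariances influence only their immediate neighbourhood, while larger covariances spread correlation further, which is the local-influence behaviour described above.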

Applications

Depth Completion

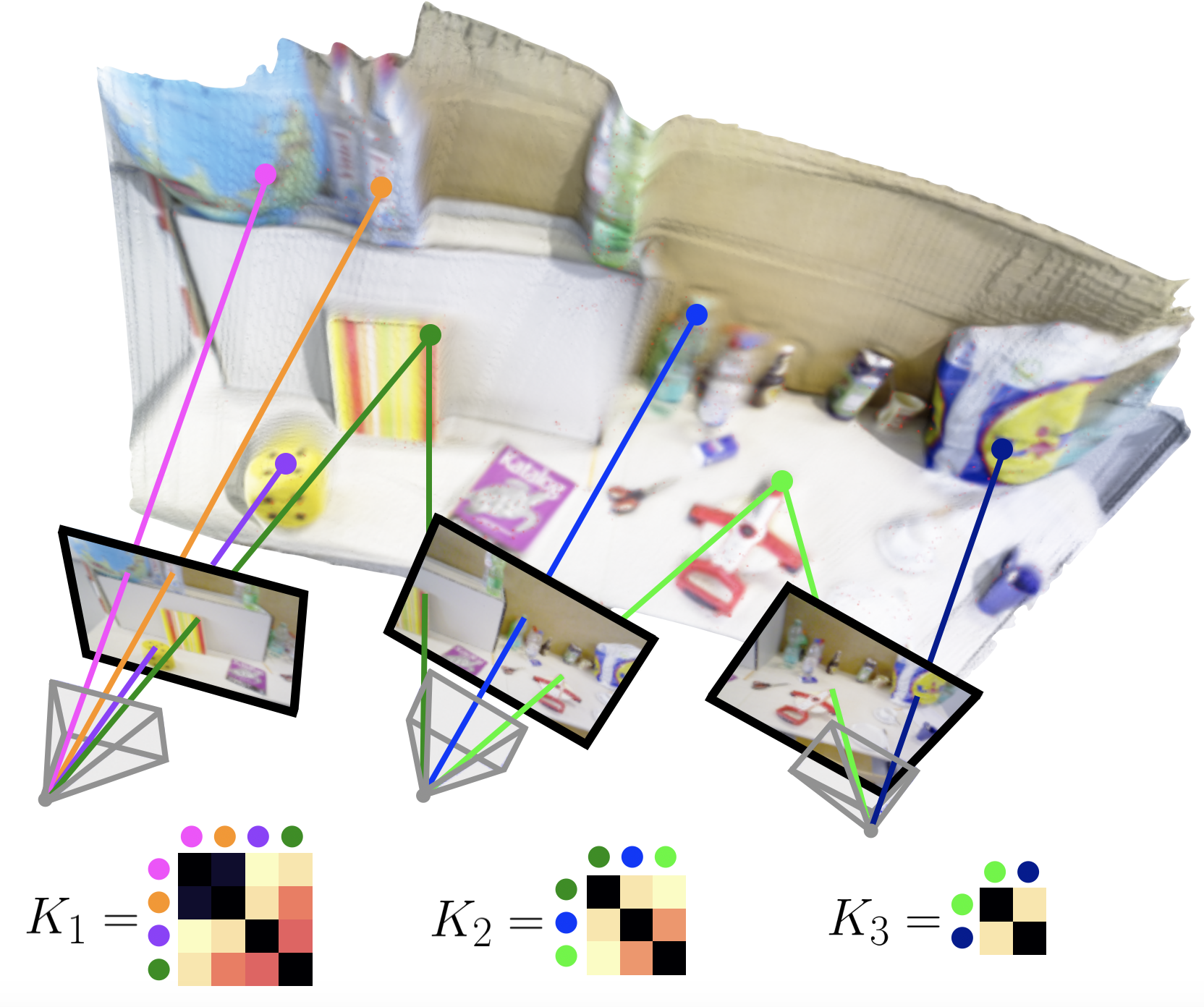

Small Baseline Bundle Adjustment and Dense Fusion

Monocular Dense Visual Odometry

BibTeX

@inproceedings{dexheimer_depthcov_2023,
  title={Learning a Depth Covariance Function},
  author={Dexheimer, Eric and Davison, Andrew J.},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition ({CVPR})},
  year={2023}
}