COMO: Compact Mapping and Odometry

Real-time monocular dense reconstruction and camera tracking using only RGB as input.

Abstract

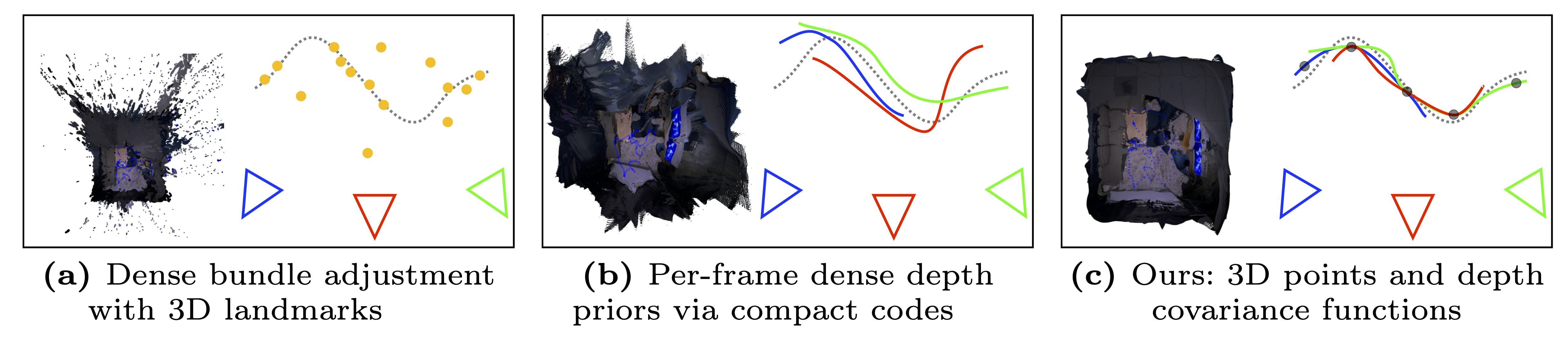

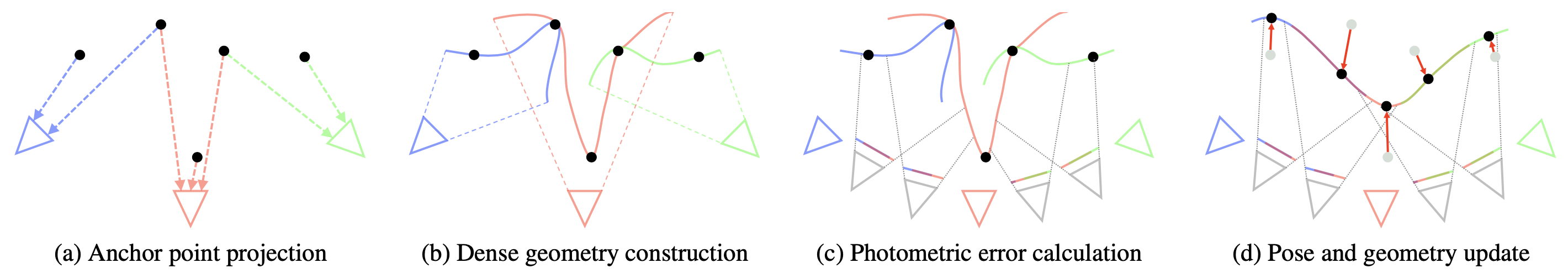

We present COMO, a real-time monocular mapping and odometry system that encodes dense geometry via a compact set of 3D anchor points. Decoding anchor point projections into dense geometry via per-keyframe depth covariance functions guarantees that depth maps are joined together at visible anchor points. The representation enables joint optimization of camera poses and dense geometry, intrinsic 3D consistency, and efficient second-order inference. To maintain a compact yet expressive map, we introduce a frontend that leverages the covariance function for tracking and initializing potentially visually indistinct 3D points across frames. Altogether, we introduce a real-time system capable of estimating accurate poses and consistent geometry.

COMO decodes dense geometry from a compact set of 3D anchor points

Dense geometry is fully parameterized by anchor points and per-keyframe depth covariance functions.
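As a rough illustration of how a handful of anchor depths plus a covariance function can determine a dense depth map, the sketch below conditions a Gaussian-process-style prior on three anchors along a single 1D scanline. The squared-exponential kernel, the fixed length scale, the constant prior mean, and the names `rbf`, `solve`, and `decode_depth` are all assumptions made for this toy; it does not reproduce COMO's per-keyframe covariance functions.

```python
import math

def rbf(x1, x2, length_scale=10.0):
    """Squared-exponential covariance between two pixel coordinates (assumed kernel)."""
    return math.exp(-0.5 * ((x1 - x2) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def decode_depth(anchor_px, anchor_depth, query_px, jitter=1e-9):
    """Conditional mean of depth at query pixels, given depths at anchor pixels."""
    mean = sum(anchor_depth) / len(anchor_depth)  # constant prior mean (assumption)
    K = [[rbf(a, b) + (jitter if i == j else 0.0)
          for j, b in enumerate(anchor_px)]
         for i, a in enumerate(anchor_px)]
    alpha = solve(K, [d - mean for d in anchor_depth])
    return [mean + sum(rbf(q, a) * w for a, w in zip(anchor_px, alpha))
            for q in query_px]

# Three anchors on a 64-pixel scanline decode into a full depth profile.
anchor_px = [5.0, 30.0, 55.0]
anchor_depth = [2.0, 2.5, 1.8]
dense = decode_depth(anchor_px, anchor_depth, [float(x) for x in range(64)])
```

In this toy the decoded profile passes through the anchor depths (up to the small jitter term), which is the property that lets depth maps sharing an anchor agree at that point.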

COMO achieves intrinsic 3D consistency between depth maps

COMO achieves both inter-frame and intra-frame 3D consistency by explicitly anchoring depth maps together.

COMO permits efficient optimization of poses and dense geometry

Poses and anchor points are jointly optimized via second-order minimization of dense photometric error.
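To make the idea of minimizing photometric error with a second-order-style solver concrete, here is a minimal sketch that uses Gauss-Newton to recover a single 1D shift between two synthetic intensity signals. The choice of Gauss-Newton, the synthetic `intensity` function, and the one-parameter "pose" are assumptions for illustration only; the page does not specify COMO's solver, and the real system optimizes camera poses and anchor points jointly rather than a scalar shift.

```python
import math

def intensity(x):
    """Smooth synthetic 1D 'image' (an assumption standing in for real pixels)."""
    return math.sin(0.3 * x) + 0.5 * math.cos(0.11 * x)

def gradient(f, x, h=1e-4):
    """Central-difference approximation of the image gradient at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def gauss_newton_shift(ref, tgt, pixels, t0=0.0, iters=10):
    """Estimate the shift t minimizing sum_i (tgt(x_i + t) - ref(x_i))^2."""
    t = t0
    for _ in range(iters):
        JtJ = 0.0
        Jtr = 0.0
        for x in pixels:
            r = tgt(x + t) - ref(x)     # photometric residual at this pixel
            J = gradient(tgt, x + t)    # derivative of the residual w.r.t. t
            JtJ += J * J
            Jtr += J * r
        t -= Jtr / JtJ                  # scalar normal-equation update
    return t

# The target signal is the reference shifted by a known amount.
true_shift = 1.5
target = lambda x: intensity(x - true_shift)
estimate = gauss_newton_shift(intensity, target, [float(x) for x in range(100)])
```

With more parameters the same loop accumulates a matrix `JtJ` and a vector `Jtr` and solves a linear system per iteration; a compact parameterization keeps that system small.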

COMO enables live tracking and dense mapping

The system allows robust tracking and mapping with only RGB as input.

COMO achieves consistent poses and dense geometry

The estimated poses and dense geometry are suitable for TSDF fusion, and the geometry can still be continually optimized.

Citation

@article{dexheimer2024como,
  title={{COMO}: Compact Mapping and Odometry},
  author={Dexheimer, Eric and Davison, Andrew J.},
  journal={arXiv preprint arXiv:2404.03531},
  year={2024}
}

Acknowledgements

Research presented in this paper has been supported by Dyson Technology Ltd.

We would like to thank Riku Murai, Hide Matsuki, Gwangbin Bae and members of the Dyson Robotics Lab for research discussions and feedback.

The website template was derived from Michaël Gharbi and ReconFusion.